Going Serverless

Going serverless? What does that mean?

The following blog post is about the DX Lab’s new cloud architecture, so it is more technical than our other posts. However, we feel it is just as important to share this research and document our decision-making, as we do with all our experiments. In fact, this has been an experiment in itself; cloud technology moves so fast that even this blog post needed updating while it was being written.

Headless in the Cloud

It has been a couple of years since we last updated the DX Lab’s cloud infrastructure. At that time we embraced a ‘headless’ architecture, keeping backends and frontends as separate applications. As discrete units, they were much easier to update and maintain.

Despite this separation, we still had most of our stack on one server. There were various reasons for this (we’ll go into them later), but we had started to outgrow this approach.

Single Server

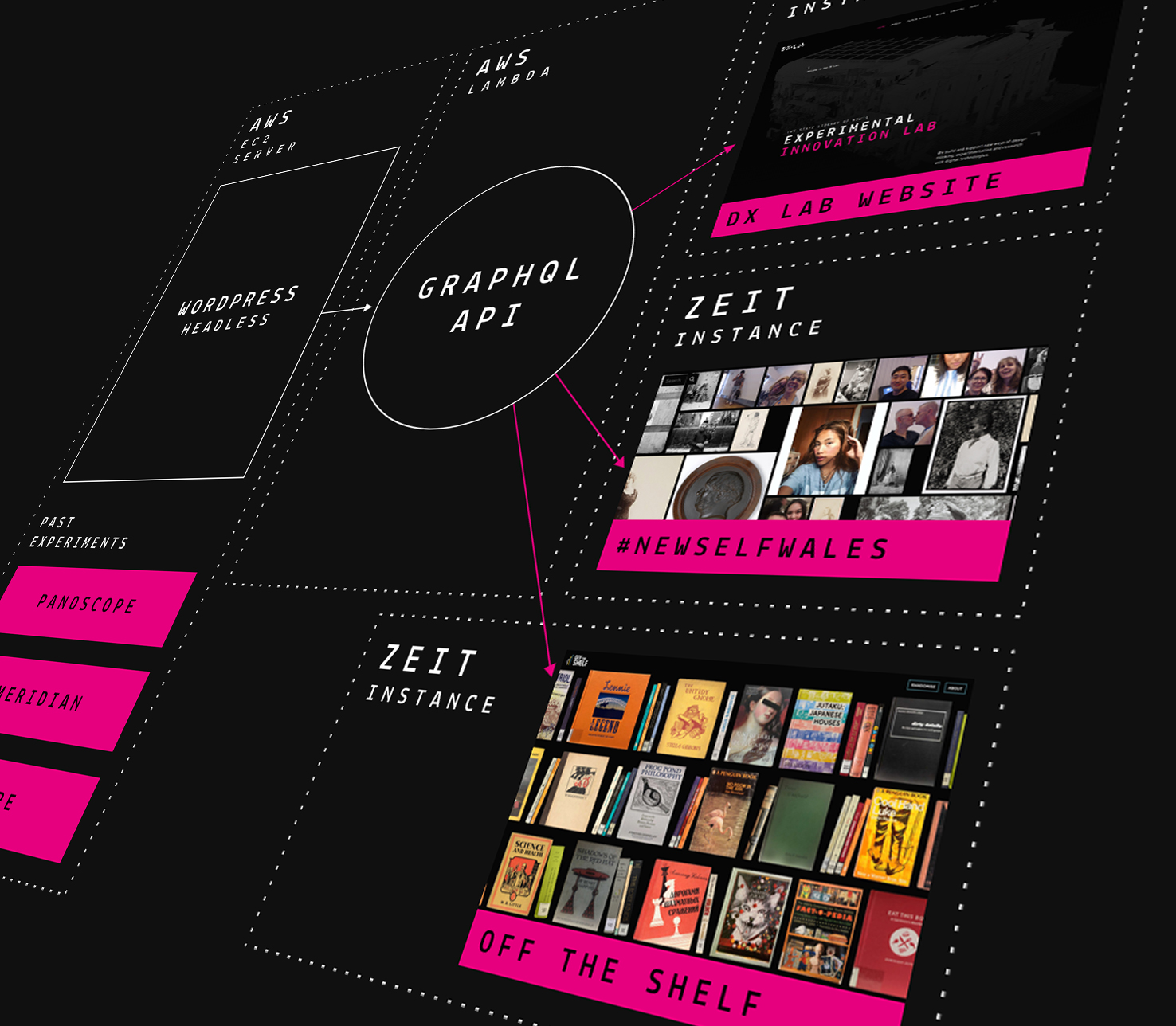

We ran our previous architecture mainly from one server on the Amazon Web Services (AWS) cloud platform.

This AWS EC2 instance (EC2 stands for Elastic Compute Cloud, a fancy term for a virtual server in the cloud) served the majority of our tech stack:

- WordPress
  - ‘Headless’, only using the admin and API features of WordPress
- GraphQL API
  - Our central data API, built with Node JS and Express
- DX Lab Website
  - Next JS and React, using Node JS
- Past Experiments
  - Almost all of our early experiments

While most of the stack was on one server, our applications behaved more like ‘microservices’, where each component is independent of the others and can be deployed and updated separately.

We actually had two servers with this exact setup: one for ‘production’ and the other for ‘staging’.

In addition to this AWS EC2 server, we also used a cloud provider called Zeit to host some of our recent experiments.

Accidents do Happen

Last year, a certain Technical Lead accidentally upgraded the staging server’s operating system (from Ubuntu 16.04 to 18.04). This unplanned upgrade finished after 2 hours of downloads and package updates. The good news was that the server still worked. The bad news was that we lost all admin access to the server, preventing future deployments and upgrades. This is a known issue discussed on AWS’s forums, and the easiest option for us was to rebuild the server completely.

Luckily this incident happened on staging and not on production. However, it did highlight specific issues with our architecture. Being so reliant on one server meant that it became a single point of failure, so we took this opportunity to review our approach.

New Architecture

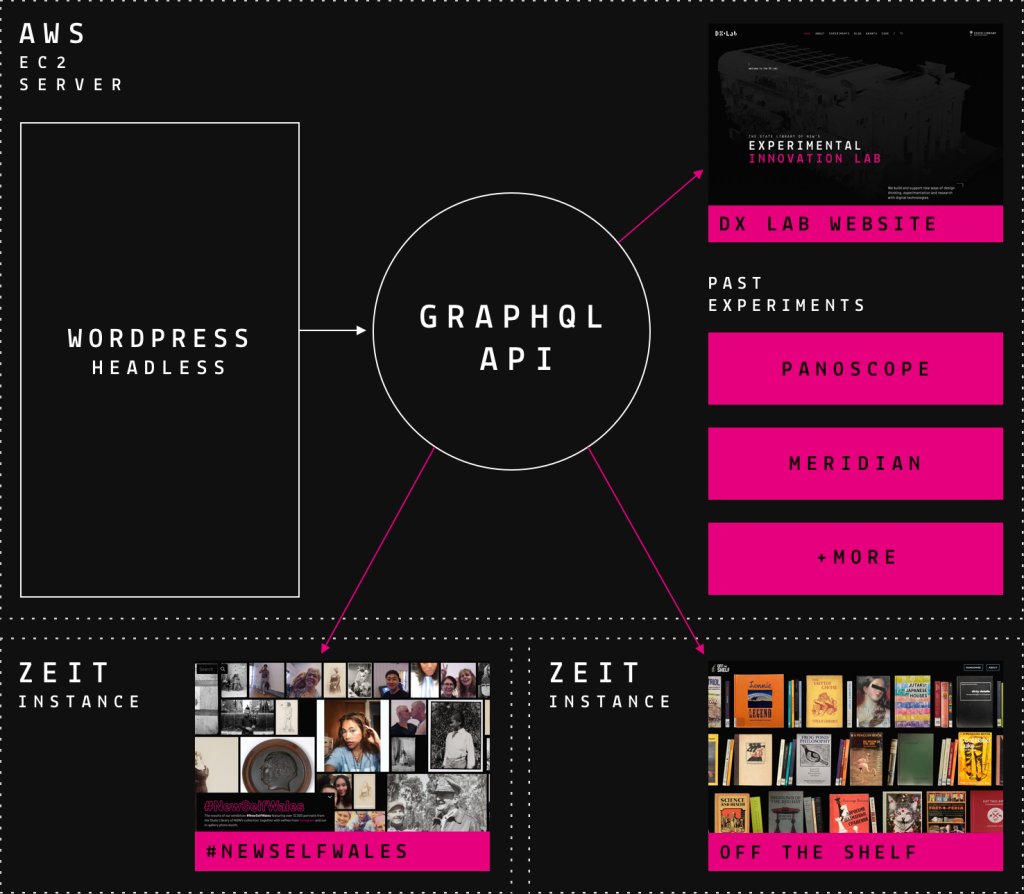

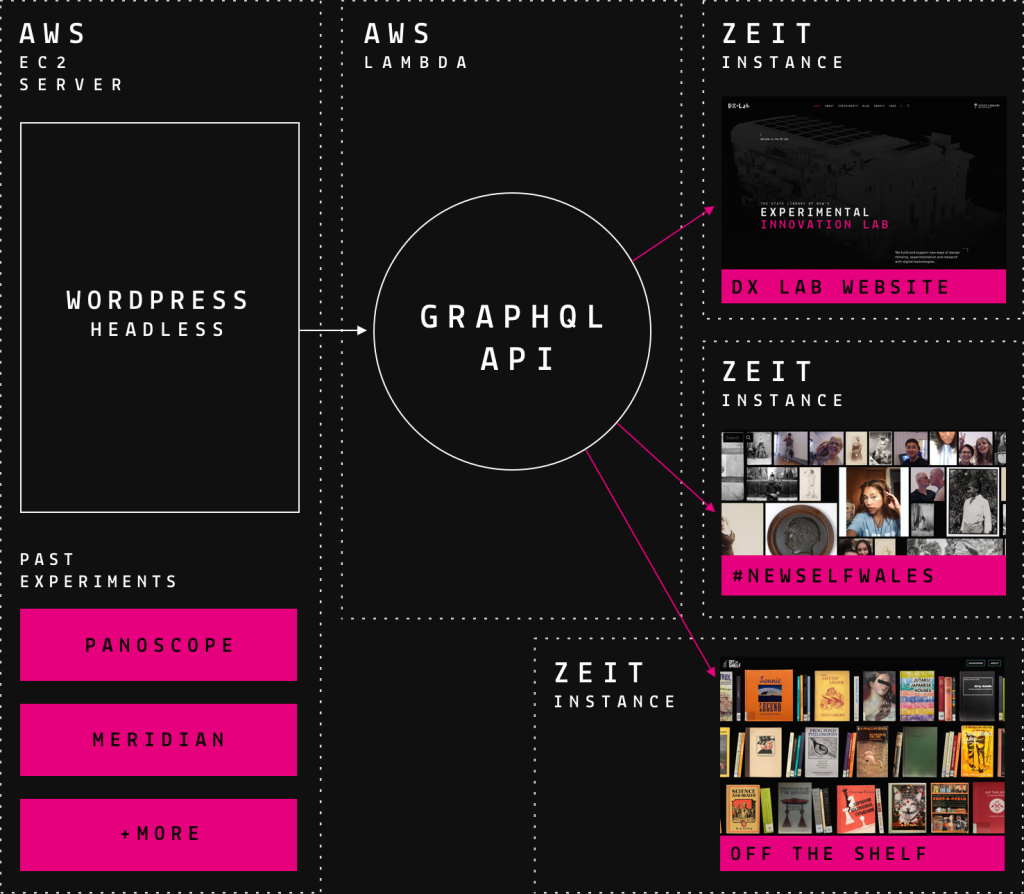

Our rebuilt AWS EC2 server remains. However, it is only responsible for:

- WordPress
  - Headless approach again, only using the API and admin features
- Past Experiments

Time for Zeit

The DX Lab website has been moved away from AWS to Zeit. We’ve previously used this next-generation cloud provider for complex projects such as #NewSelfWales, and it has served us well.

This ‘serverless’ platform abstracts away a lot of the pain of managing our servers. Benefits include:

- On-demand usage: we only pay for what we use (as opposed to servers that keep running even when no one is using them)

- Automatic server upgrades

- Better security (no long-running servers to hack into)

- Auto-scaling up and down

Zeit also has a few in-built features we liked:

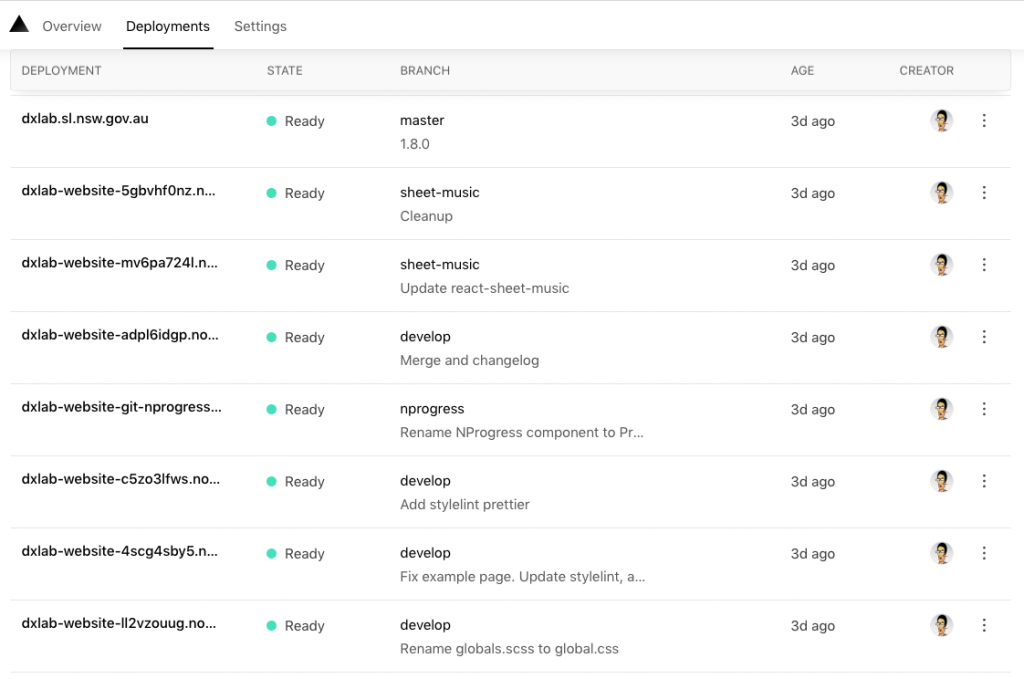

- Free SSL – encrypts website traffic, which some browsers require

- Automatic HTTP to HTTPS redirects

- ‘Immutable’ deployments – every deployment has a unique domain name. Deploying to production simply involves pointing the production domain name (e.g. example.com) at the unique one (e.g. example-xyz123.now.sh). If a mistake is made, we can easily roll back by pointing the domain at the previous deployment.

- GitHub integration – any changes to our source code are automatically deployed to Zeit with a unique URL

- New deployment for every commit – makes it simple to share upcoming features with the team
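To make the ‘immutable’ deployment idea concrete, here is a minimal TypeScript sketch that models it in memory. It is not Zeit’s API: the `AliasRegistry` class, its methods and the commit IDs are hypothetical, and on Zeit the platform itself handles aliasing and rollback. What it shows is that a rollback never rebuilds anything; it only re-points the domain at a deployment that still exists unchanged.

```typescript
// Hypothetical in-memory model of alias-based ("immutable") deployments.
// Each deployment has its own URL and is never modified; going live means
// pointing a domain at one of them.

type Deployment = { commit: string; url: string };

class AliasRegistry {
  private deployments: Deployment[] = [];
  // domain -> every URL it has pointed at, oldest first
  private aliasHistory = new Map<string, string[]>();

  // Record a finished deployment under its unique URL.
  deploy(commit: string, url: string): Deployment {
    const d: Deployment = { commit, url };
    this.deployments.push(d);
    return d;
  }

  // Point a domain (e.g. "example.com") at an existing deployment URL.
  alias(domain: string, url: string): void {
    if (!this.deployments.some((d) => d.url === url)) {
      throw new Error(`unknown deployment: ${url}`);
    }
    const targets = this.aliasHistory.get(domain) ?? [];
    targets.push(url);
    this.aliasHistory.set(domain, targets);
  }

  current(domain: string): string | undefined {
    const targets = this.aliasHistory.get(domain);
    return targets ? targets[targets.length - 1] : undefined;
  }

  // Roll back by re-pointing the domain at its previous deployment.
  // Nothing is rebuilt: the old deployment still exists, unchanged.
  rollback(domain: string): string {
    const targets = this.aliasHistory.get(domain);
    if (!targets || targets.length < 2) {
      throw new Error(`nothing to roll back to for ${domain}`);
    }
    targets.pop();
    return targets[targets.length - 1];
  }
}

// Usage with hypothetical commit IDs and deployment URLs:
const registry = new AliasRegistry();
registry.deploy("a1b2c3", "example-abc123.now.sh");
registry.deploy("d4e5f6", "example-xyz123.now.sh");
registry.alias("example.com", "example-abc123.now.sh");
registry.alias("example.com", "example-xyz123.now.sh"); // go live with the new build
registry.rollback("example.com"); // example.com -> example-abc123.now.sh again
```

Because each deployment URL is never overwritten, going live and rolling back are the same cheap operation: updating one pointer.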

Not in our Domain

For the DX Lab website, we actually wanted to move to Zeit a little earlier. Unfortunately, at the time they could only handle ‘apex’ domains: for example, ‘example.com’ is the apex in ‘shop.example.com’, while ‘shop’ is a subdomain. As we are part of the New South Wales government, our apex is ‘nsw.gov.au’, and our domain needed to be ‘dxlab.sl.nsw.gov.au’ (dxlab being a sub-subdomain of nsw.gov.au). To use Zeit, the entire New South Wales government would have had to hand over control of the domain, which was obviously not an option.

After some back-and-forth with the Library’s IT team and Zeit’s support staff, we finally managed to get ‘dxlab.sl.nsw.gov.au’ pointing to Zeit’s platform.

Next JS

We built our website with Next JS, an open-source framework that Zeit maintains and that is, of course, well suited to their platform. Next JS can be hosted on other platforms too; for example, the Library’s new Collection Beta uses Next JS but is hosted on AWS Lambda.

AWS Lambda is similarly a ‘serverless’ environment, where code only runs when it is invoked. Once again, no server maintenance is required and scaling up or down happens automatically.

Micro-Frontends

We did consider using AWS Lambda for the DX Lab website. However, Zeit has all the in-built features listed above, as well as a better developer experience than AWS. It also has a convenient ‘rewrite’ feature that lets you control where clean URLs are proxied to.

To illustrate:

- dxlab.sl.nsw.gov.au – this is the website you are on

- dxlab.sl.nsw.gov.au/blog/going-serverless – same website and page you are currently on

- dxlab.sl.nsw.gov.au/newselfwales – internally rewrites to dxlab-newselfwales.now.sh

- dxlab.sl.nsw.gov.au/visionaries-explorer – internally rewrites to wp.dxlab.sl.nsw.gov.au/visionaries-explorer

- Plus more experiments that run on a variety of technologies, each with its own mini-stack

This structure gives us a kind of ‘micro-frontend’ architecture, where clean URLs on dxlab.sl.nsw.gov.au actually rewrite to other servers and even other hosting providers, hiding the underlying systems. Over the years, we have built up a variety of experiments in various places. We can update these independently of the main DX Lab website and vice versa.
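On Zeit, rewrites like these are declared in the project’s configuration file rather than written as code. The TypeScript sketch below only models the matching logic, assuming simple path-prefix rules; the `resolveRewrite` function, the `https://` scheme and the way sub-paths (e.g. `/newselfwales/about`) are carried across are illustrative assumptions, not Zeit’s actual behaviour.

```typescript
// Hypothetical sketch of path-prefix rewrites for a micro-frontend setup.
// The table mirrors the examples above but is not the Lab's real config.

type Rewrite = { prefix: string; destination: string };

const rewrites: Rewrite[] = [
  { prefix: "/newselfwales", destination: "https://dxlab-newselfwales.now.sh" },
  {
    prefix: "/visionaries-explorer",
    destination: "https://wp.dxlab.sl.nsw.gov.au/visionaries-explorer",
  },
];

// Return the upstream URL to proxy to, or null if the main
// DX Lab website should handle the path itself.
function resolveRewrite(path: string, table: Rewrite[]): string | null {
  for (const { prefix, destination } of table) {
    // Match the prefix exactly or as a whole path segment, so that
    // "/newselfwalesx" does not accidentally match "/newselfwales".
    if (path === prefix || path.startsWith(prefix + "/")) {
      // Carry any remaining sub-path over to the destination (assumption).
      return destination + path.slice(prefix.length);
    }
  }
  return null;
}
```

With this table, `/blog/going-serverless` returns `null` and is served by the main website, while `/newselfwales/about` would be proxied to `https://dxlab-newselfwales.now.sh/about`. The visitor only ever sees the clean dxlab.sl.nsw.gov.au URL.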

Static Site Generation

While we were writing this blog post, Zeit added a long-awaited feature called static site generation to Next JS. It had the potential to speed up our website significantly, so we explored this feature and managed to get it working.

Our previous website fetched data from our GraphQL API on every page visit. The home page in particular had complex data requirements and did not load as fast as we’d like.

Static site generation works by fetching data when the website is built and deployed, and then rendering each page as flat HTML. When a reader accesses the site, they don’t need to wait for data, as they get the flat HTML page straight away.
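In Next JS, static generation is done with a page-level `getStaticProps` function (plus `getStaticPaths` for dynamic routes). The framework-free TypeScript sketch below only models the idea: data is fetched once during the build, every page is rendered to flat HTML, and requests become simple lookups. The `Post` shape, the page paths and the `serve` helper are hypothetical.

```typescript
// Simplified, hypothetical model of static site generation.

type Post = { slug: string; title: string };
type SiteData = { posts: Post[] };

function escapeHtml(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Build step: runs during deployment, after the data has been fetched
// (e.g. from the GraphQL API). Produces a map of path -> flat HTML.
function buildStaticSite(data: SiteData): Map<string, string> {
  const pages = new Map<string, string>();
  const items = data.posts
    .map((p) => `<li><a href="/blog/${p.slug}">${escapeHtml(p.title)}</a></li>`)
    .join("");
  pages.set("/", `<ul>${items}</ul>`);
  for (const p of data.posts) {
    pages.set(`/blog/${p.slug}`, `<h1>${escapeHtml(p.title)}</h1>`);
  }
  return pages;
}

// Request time: no API call, just a lookup of pre-rendered HTML.
function serve(pages: Map<string, string>, path: string): { code: number; html: string } {
  const html = pages.get(path);
  return html === undefined ? { code: 404, html: "Not found" } : { code: 200, html };
}
```

The lookup in `serve` is also why the resilience benefit below holds: once the pages are built, answering a request no longer depends on WordPress or the API being up.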

Benefits:

- 10x faster
  - Old website load time ~4 seconds (check it out here: https://dxlab-website-old-slow-demo.now.sh)
  - New website load time 400ms
- Resilience: WordPress or our API could be down, and our website would still work
- Leverage global Content Delivery Networks (CDNs): static pages can easily be replicated across the world, so page requests do not have to travel far to get content.

One disadvantage of static site generation is that content updates in our CMS (WordPress) do not show up on the static site until it is rebuilt and redeployed.

Fortunately, Zeit provides a special URL we can visit to trigger an automatic build and deploy of the site. We then set WordPress up to request this URL whenever any content updates are made. The new content appears on the site a minute or two later.
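Inside WordPress itself this would be PHP, for example an action hooked to `save_post` that calls `wp_remote_post`. To keep all examples in one language, here is the same trigger sketched in TypeScript. The hook URL is a placeholder (the real one is issued by the hosting provider and should be kept secret), the POST method is an assumption to check against the provider’s documentation, and the sender is injected so it can be swapped for `fetch` or a test double.

```typescript
// Hypothetical sketch: ask the hosting provider to rebuild and redeploy
// the static site whenever CMS content changes.

// Injected so the real `fetch` or a test double can be used.
type Sender = (url: string, init: { method: string }) => Promise<{ ok: boolean }>;

// Placeholder only: the real deploy hook URL comes from the hosting
// provider and should be treated as a secret.
const DEPLOY_HOOK_URL = "https://example.invalid/deploy-hook/PLACEHOLDER";

// Call on every content update. Resolves to true if the provider
// accepted the request. (POST is an assumption; check the provider's docs.)
async function triggerRebuild(
  send: Sender,
  hookUrl: string = DEPLOY_HOOK_URL,
): Promise<boolean> {
  const res = await send(hookUrl, { method: "POST" });
  return res.ok;
}

// Usage (Node 18+ or a browser, where fetch is global):
// triggerRebuild((url, init) => fetch(url, init)).then((ok) => {
//   if (!ok) console.error("Deploy hook call failed");
// });
```

Keeping the trigger this small means WordPress never waits for the build itself; it only tells the provider to start one, and the new pages go live once the deployment finishes.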

GraphQL API

The DX Lab website, along with our recent experiments, gets its data from a central GraphQL API server. This is a next-generation API that sits in front of other APIs, a convenient one-stop shop for our data needs. This GraphQL API was previously on the same server as the website and WordPress.
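As an illustration of the ‘one-stop shop’, a page can ask for posts and experiments in a single request, even if the data comes from different upstream systems. GraphQL over HTTP is commonly sent as a POST with a JSON body holding the query text and its variables; the sketch below builds such a request. The endpoint URL and the `posts`/`experiments` fields are hypothetical and do not describe the Lab’s real schema.

```typescript
// Hypothetical sketch of a frontend querying a central GraphQL API.

const GRAPHQL_ENDPOINT = "https://api.example.invalid/graphql"; // hypothetical

// One query, two kinds of data (e.g. WordPress posts and experiment listings).
const HOME_PAGE_QUERY = `
  query HomePage($limit: Int!) {
    posts(limit: $limit) { title slug }
    experiments { title url }
  }
`;

// Build a standard GraphQL-over-HTTP request: POST with a JSON body
// containing the query text and its variables.
function buildGraphQLRequest(query: string, variables: Record<string, unknown>) {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ query, variables }),
  };
}

// Usage (Node 18+ or a browser, where fetch is global):
// const res = await fetch(GRAPHQL_ENDPOINT, buildGraphQLRequest(HOME_PAGE_QUERY, { limit: 6 }));
// const { data, errors } = await res.json();
```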

While it would make sense to also host our GraphQL API on Zeit (along with the new website), Zeit is deprecating a feature we need: bidirectional communication. Using ‘websockets’, a browser can not only send data to a server but also receive new data from it in realtime. The most common use case for websockets is a chat application.

This is a fairly advanced feature, but we needed it for the never-ending image feed on the #NewSelfWales home page, which relies on a central server sending new images to ‘subscribed’ browsers.

Luckily, last year AWS announced support for websockets on AWS Lambda (via Amazon API Gateway). We experimented with a proof of concept, and it worked exceptionally well. Migrating from AWS EC2 to AWS Lambda was reasonably straightforward, mainly because we had already experimented with AWS Lambda using the Serverless framework.
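The sketch below outlines how a feed like this can run on AWS Lambda behind an API Gateway WebSocket API. API Gateway invokes the function with route keys such as `$connect` and `$disconnect`, along with a `connectionId` for each browser. Everything else is a labelled assumption: the `WsEvent` type is a trimmed-down subset of the real event, and the `ConnectionStore` and `Push` interfaces are hypothetical stand-ins. In practice, connection IDs would live in an external store, because separate Lambda invocations do not share memory, and messages would be sent with the API Gateway Management API’s `PostToConnection` action.

```typescript
// Hypothetical sketch of a realtime image feed on AWS Lambda behind an
// API Gateway WebSocket API. Storage and message delivery are injected.

// Trimmed-down subset of the event API Gateway passes to the function.
type WsEvent = { requestContext: { routeKey: string; connectionId: string } };

// Where subscribed connection IDs live (in production: an external store).
interface ConnectionStore {
  add(id: string): Promise<void>;
  remove(id: string): Promise<void>;
  all(): Promise<string[]>;
}

// Sends a message to one browser (in production: PostToConnection).
type Push = (connectionId: string, data: string) => Promise<void>;

// Lambda handler: track which browsers are subscribed to the feed.
function makeHandler(store: ConnectionStore) {
  return async (evt: WsEvent): Promise<{ statusCode: number }> => {
    const { routeKey, connectionId } = evt.requestContext;
    if (routeKey === "$connect") {
      await store.add(connectionId);
    } else if (routeKey === "$disconnect") {
      await store.remove(connectionId);
    }
    return { statusCode: 200 };
  };
}

// Called when a new image arrives: push it to every subscribed browser.
// A failed push (e.g. the browser has gone away) drops that connection.
async function broadcastImage(
  store: ConnectionStore,
  push: Push,
  imageUrl: string,
): Promise<number> {
  const message = JSON.stringify({ type: "newImage", imageUrl });
  let delivered = 0;
  for (const id of await store.all()) {
    try {
      await push(id, message);
      delivered++;
    } catch {
      await store.remove(id);
    }
  }
  return delivered;
}

// In-memory store, suitable only for local experiments and tests.
class MemoryStore implements ConnectionStore {
  private ids = new Set<string>();
  async add(id: string): Promise<void> {
    this.ids.add(id);
  }
  async remove(id: string): Promise<void> {
    this.ids.delete(id);
  }
  async all(): Promise<string[]> {
    return Array.from(this.ids);
  }
}
```

Separating connection tracking from broadcasting keeps each Lambda invocation short: browsers subscribe and unsubscribe through the handler, while whatever receives a new image only has to call `broadcastImage`.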

The new Collection Beta API and frontend application also use AWS Lambda and Serverless (the framework, not the concept; yes, it is confusing).

Gone Serverless

The diagram above illustrates the advantage of our new architecture. Having split our stack into more discrete components, we no longer rely on one server that could be a single point of failure. We can update each component without affecting the others, which makes the whole system more resilient.

While having some parts of the stack on Zeit and others on AWS isn’t ideal, it is a trade-off we were willing to make: we picked the best platform for each job.

We’ll evaluate the new architecture over the coming months, but we now have stronger foundations for the future.

Comments

Thanks for the tech info. Always interesting to see how people get things to work behind the scenes.

Loving the We Are What We Steal pages.

Small point, looks like Zeit is now called Vercel.