Facial recognition research

The Young Creative Technologist Award is a unique opportunity for a young person to undertake an innovative project of their choice. This award is proudly supported by Macquarie Group, providing an opportunity to create an innovative digital experience utilising some of the Library’s 10 million digitised images.

Vignesh Sankaran was the first winner of this award. Below, he discusses the results of his research on facial recognition using the Library’s Sam Hood collection.

What made you apply for the award?

VS: It was an opportunity to try out building a new project from the ground up. Working on this experiment with new technologies is exciting for me since it’s an opportunity to start afresh and set the direction as I see fit.

Describe your research and the outcomes you were hoping to achieve.

VS: My initial goal was to build a platform that could analyse images and store the results in a backend database. From there, a search index would use the results to facilitate searching for faces in the image collection. This would require another system that assigned names to the faces.

Naturally, this was quite an ambitious project with lots of moving parts, so I scaled it back to gear it more towards Library staff. The application would show the bounding boxes of recognised faces and be able to search the image collection for faces similar to a selected face. My goal was to also provide an automated way for Library staff to add more images to the application’s image collection.

In the end, I built a system that focused on showcasing AWS Rekognition’s facial detection and recognition abilities on a sample of images from the Sam Hood collection. Going through this process taught me the value of refining a research idea down to its core, and that research doesn’t necessarily require a full-blown production system as an end result to be successful in achieving its goal.

What did you end up building?

VS: I built a web application that demonstrates the capabilities of facial detection and recognition with AWS Rekognition. The main page of the web application is a gallery that shows the images that have been analysed by AWS Rekognition.

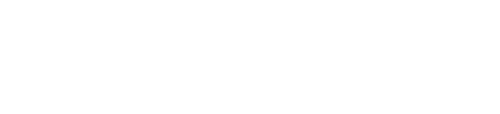

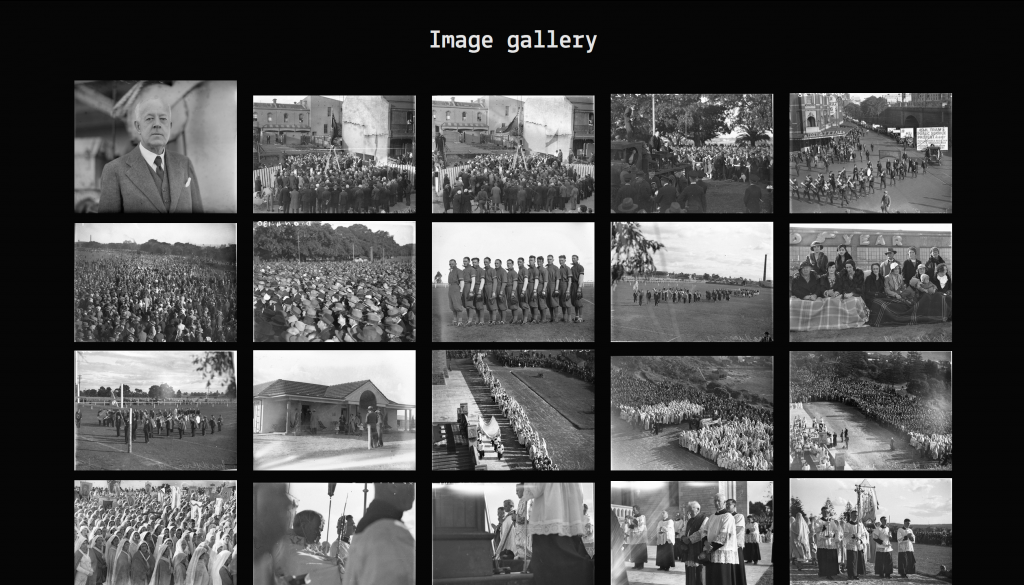

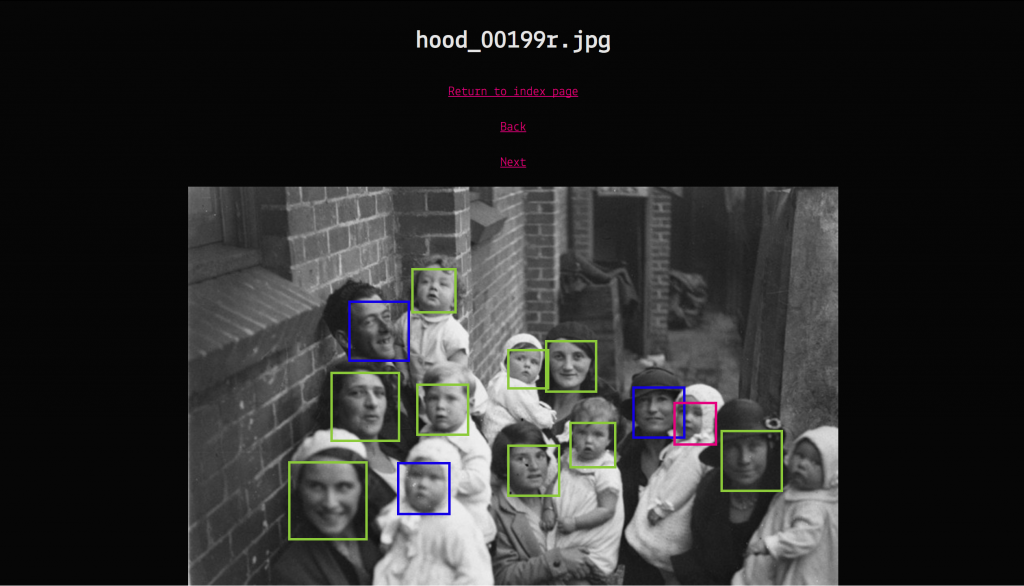

Clicking on an image shows the results of the facial detection, with bounding boxes drawn around the detected faces. Bounding boxes coloured dark blue mark faces for which similar faces have been detected in the sample image collection, with a 95% degree of confidence.

When a dark blue bounding box is clicked, images in which the similar face has been detected are shown below, and the bounding box for that face turns pink in the images where it has been detected.
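The interview does not include code, but the display logic described above can be sketched briefly. The TypeScript below is a minimal illustration under stated assumptions: Rekognition reports each bounding box as ratios of the image's width and height, so the sketch converts those ratios to pixels and flags faces that have at least one match at or above 95% similarity. The `DetectedFace` shape, the function names, and the reading of the 95% figure as a similarity threshold are assumptions, not details taken from the project.

```typescript
// Rekognition bounding box: every value is a ratio of the overall image
// width or height, not a pixel count.
interface BoundingBox {
  Left: number;
  Top: number;
  Width: number;
  Height: number;
}

// Hypothetical record for one detected face as the application might store it.
interface DetectedFace {
  faceId: string;
  box: BoundingBox;
  // Similarity scores (0 to 100) of matches found elsewhere in the collection.
  matchSimilarities: number[];
}

interface PixelBox {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Assumed threshold, based on the 95% figure quoted in the interview.
const SIMILARITY_THRESHOLD = 95;

// Convert a ratio-based box into pixel coordinates for drawing an overlay.
function toPixelBox(box: BoundingBox, imageWidth: number, imageHeight: number): PixelBox {
  return {
    x: Math.round(box.Left * imageWidth),
    y: Math.round(box.Top * imageHeight),
    width: Math.round(box.Width * imageWidth),
    height: Math.round(box.Height * imageHeight),
  };
}

// A face is highlighted (dark blue in the application) when at least one
// match meets the threshold.
function hasSimilarFaces(face: DetectedFace): boolean {
  return face.matchSimilarities.some((score) => score >= SIMILARITY_THRESHOLD);
}
```

A front end could call `toPixelBox` once per face when the image loads and use `hasSimilarFaces` to choose the box colour.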

What technologies have you used?

VS: I used Amazon technologies to form the backbone of the web application. AWS Rekognition was used to detect faces and search for similar faces in the entirety of the provided image collection. The results of the facial analysis were stored as JSON files in S3, alongside the images themselves.
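As a rough illustration of that flow, the sketch below builds request parameters for three real AWS operations: Rekognition's IndexFaces and SearchFaces, and S3's PutObject. Which operations the project actually called is not stated in the interview, and the bucket name, collection ID, key layout, and 95 threshold are all hypothetical. The functions only construct plain objects, so they can be run without AWS credentials. In a real application the objects would be passed to an AWS SDK client.

```typescript
// Hypothetical names: the interview gives no bucket, collection ID, or key layout.
const BUCKET = "example-sam-hood-sample";
const COLLECTION_ID = "example-face-collection";

// Parameters for Rekognition IndexFaces, which detects faces in an image
// stored in S3 and adds them to a face collection.
function indexFacesParams(imageKey: string) {
  return {
    CollectionId: COLLECTION_ID,
    Image: { S3Object: { Bucket: BUCKET, Name: imageKey } },
  };
}

// Parameters for Rekognition SearchFaces, which looks up faces in the
// collection that resemble an already indexed face.
function searchFacesParams(faceId: string) {
  return {
    CollectionId: COLLECTION_ID,
    FaceId: faceId,
    FaceMatchThreshold: 95, // assumed from the 95% figure in the interview
  };
}

// Assumed layout: the analysis JSON sits next to the image under the same
// name, with the extension swapped for ".json".
function analysisKeyFor(imageKey: string): string {
  const withoutExtension = imageKey.replace(/\.[^./]+$/, "");
  return withoutExtension + ".json";
}

// Parameters for S3 PutObject to store the analysis result as JSON.
function putAnalysisParams(imageKey: string, analysis: unknown) {
  return {
    Bucket: BUCKET,
    Key: analysisKeyFor(imageKey),
    Body: JSON.stringify(analysis),
    ContentType: "application/json",
  };
}
```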

API endpoints for the front end were built with the Serverless framework in Node JS, and were hosted on AWS Lambda. Serverless handled the deployment and configuration details, and was quite easy to use. The front end was built with React JS, which I found to be a complementary technology to Node JS.
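To give a sense of what such an endpoint might look like, here is a minimal sketch of a Lambda handler that returns the stored analysis for one image, using the API Gateway proxy response shape (`statusCode`, `headers`, `body`). The route, the `imageId` path parameter, and the injected `load` function are hypothetical, and the Serverless configuration that would map the handler to a URL is omitted. This is not the project's actual code.

```typescript
// Minimal local types for the API Gateway proxy event and response.
interface ApiEvent {
  pathParameters: { [name: string]: string } | null;
}

interface ApiResponse {
  statusCode: number;
  headers: { [name: string]: string };
  body: string;
}

// Fetches the stored analysis JSON for an image, or null if none exists.
// Injected so the handler can run without AWS; a deployed version would
// read the JSON file from S3.
type LoadAnalysis = (imageId: string) => Promise<string | null>;

// Build a JSON response in the shape API Gateway expects.
function jsonResponse(statusCode: number, payload: unknown): ApiResponse {
  return {
    statusCode,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}

// Create the handler for a hypothetical GET /images/{imageId}/faces route.
function makeGetFacesHandler(load: LoadAnalysis) {
  return async (event: ApiEvent): Promise<ApiResponse> => {
    const imageId = event.pathParameters ? event.pathParameters.imageId : undefined;
    if (!imageId) {
      return jsonResponse(400, { error: "imageId is required" });
    }
    const stored = await load(imageId);
    if (stored === null) {
      return jsonResponse(404, { error: "image not found" });
    }
    return jsonResponse(200, JSON.parse(stored));
  };
}
```

Passing the loader in as an argument keeps the handler easy to exercise locally with a stub.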

What worked well and what didn’t work?

VS: One of the things that worked well was the technology stack used for this project. The Serverless framework made it easy to build and deploy back end applications with Node JS. The AWS platform was also well suited to this project, since it reduces the amount of effort otherwise spent focused on deployment and maintenance.

One thing that didn’t work as well was attempting to build Node JS applications without unit testing. Dynamic languages are very flexible, and I found it difficult to stay focused when there was a myriad of ways to move forward. By setting up test-driven development, I was better able to set a path for development and stay focused on the task at hand.

Debugging Serverless handlers was also something that didn’t work well. Unfortunately, the endpoints needed to be deployed to AWS in order to test them, and if there was a bug in the handler, the API response would only show up as an internal server error. I worked around this problem by using the AWS CloudWatch logs to ascertain where in the Serverless handler the issue was arising.
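One common way to make those CloudWatch logs easier to read is to wrap each handler so that any thrown error is logged with the handler's name and stack trace before the generic 500 response goes out. The sketch below illustrates that pattern. It is not taken from the project, and `withErrorLogging` and its parameters are hypothetical names. It relies on the fact that console output from a Lambda function is written to CloudWatch Logs.

```typescript
interface ApiResponse {
  statusCode: number;
  body: string;
}

type Handler<E> = (event: E) => Promise<ApiResponse>;

// Wrap a handler so that failures are logged with enough context to locate
// them. The log function defaults to console.error and can be replaced in tests.
function withErrorLogging<E>(
  name: string,
  handler: Handler<E>,
  log: (line: string) => void = console.error
): Handler<E> {
  return async (event: E): Promise<ApiResponse> => {
    try {
      return await handler(event);
    } catch (err) {
      // Prefer the stack trace, which shows where in the handler the error arose.
      const detail = err instanceof Error ? err.stack || err.message : String(err);
      log(JSON.stringify({ handler: name, error: detail }));
      return {
        statusCode: 500,
        body: JSON.stringify({ error: "Internal server error" }),
      };
    }
  };
}
```

The caller still sees only an internal server error, but the log entry now names the failing handler and the line where the error was thrown.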

How do you think this might be useful for the Library?

The State Library is always interested in exploring its collection in different ways, and this project provides another dimension for exploration. The Sam Hood collection has over 30,000 images, and being able to see where similar faces appear throughout the collection is an exciting proposition.

You can check out Vignesh’s results here.